Every day we grasp and move objects: we hold a pen to write, bring a toothbrush to our mouth to brush our teeth, and pick up a glass to drink. Even the last of these is impossible for many people with paralysis. Grasping and holding are elementary physical abilities that enable us to live autonomously.

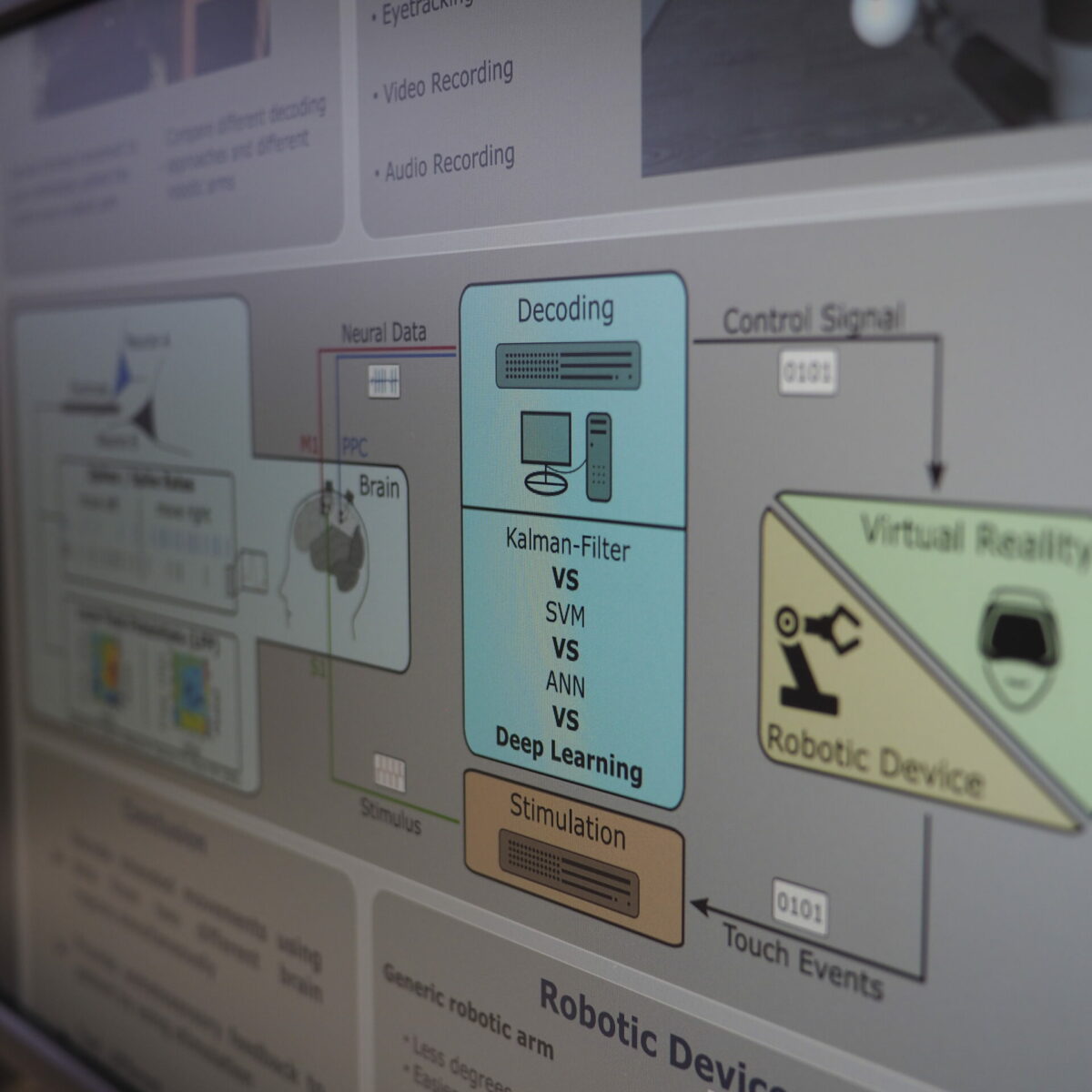

To help paralysed patients live more independently, our research group is developing advanced neuroprostheses. We take a highly interdisciplinary approach that combines neuroscience, neurotechnology, machine learning and virtual reality, and we use our expertise in these areas to develop so-called ‘brain-computer interfaces’ (BCIs). A BCI is a system that enables direct communication between the brain and a computer and can, among other things, bridge a damaged spinal cord. Neuronal signals are ‘read’ from the brain, and special software decodes the patient’s movement intentions from them. These decoded movement intentions are then used to control prostheses. The aim is to enable paralysed patients to regain independence and thus significantly improve their quality of life.

To achieve this goal, however, we need to better understand how the brain processes sensory input and motor output to generate movement intentions. But how does the brain learn to control a BCI system? How are individual neurons selected when the brain learns new tasks? And how does the brain decide to select a particular object from among many possible ones?

Our team of experts is exploring these and other questions. Our cooperation with the Knappschaftskrankenhaus enables us to work closely with local physicians and patients. To advance the development of robotic prostheses, we also collaborate with a local robotics lab, with the goal of efficiently combining technology and new scientific findings.